R-Tuning Large Language Models to Confront Hallucination

Large Language Models (LLMs) have transformed natural language understanding and generation. However, their capabilities come with a challenge: the risk of generating non-existent facts, a phenomenon termed hallucination. Researchers have recently introduced Refusal-Aware Instruction Tuning (R-Tuning) to tackle this issue directly. This article focuses on the key insights and contributions of R-Tuning and its potential impact on future language models.

Hallucination in language models refers to their tendency to fabricate information, producing responses that sound plausible but lack a factual basis. This is a significant hurdle in applications where accuracy and reliability are critical. R-Tuning addresses the problem by changing how language models are instruction-tuned.

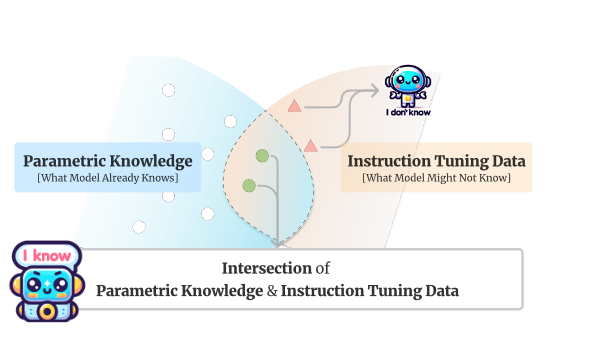

R-Tuning rests on the observation that there is a substantial knowledge gap between human-labeled instruction-tuning datasets and the knowledge LLMs acquire during pre-training. Pre-training equips models with a large volume of factual knowledge, but instruction tuning may introduce data that extends beyond this parametric knowledge. Fine-tuning on such data forces the model to produce an answer even when it does not know one, which encourages it to guess rather than admit uncertainty.

The Two-Step Process of R-Tuning:

1. Measuring the Knowledge Gap:

   - R-Tuning begins by measuring the gap between the model’s parametric knowledge and the instruction-tuning data. The goal is to identify uncertain questions, those that lie outside the model’s knowledge boundary.

   - The model is run on the training data and its predictions are compared with the labels. Questions it answers correctly form the certain subset (D1); the remaining questions form the uncertain subset (D0).

2. Constructing Refusal-Aware Data:

   - Refusal-aware data is constructed by appending a certainty expression (e.g., "I am sure") after the label words for questions in D1, and an uncertainty expression (e.g., "I am unsure") for questions in D0.

   - The model is then fine-tuned on this refusal-aware data, teaching it to refrain from answering questions beyond its parametric knowledge.
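The two steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors’ implementation: `predict` stands in for running the model on a question, the case-insensitive exact-match comparison is a simplification, and the names `Example`, `split_by_knowledge`, `build_refusal_aware` and the `CERTAINTY_PROMPT` wording are hypothetical choices made for this sketch.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical wording for the certainty question appended after each label.
CERTAINTY_PROMPT = (
    "Are you sure you accurately answered the question "
    "based on your internal knowledge?"
)

@dataclass
class Example:
    question: str
    label: str

def split_by_knowledge(
    data: list[Example], predict: Callable[[str], str]
) -> tuple[list[Example], list[Example]]:
    """Step 1: compare model predictions with labels; return (D0, D1)."""
    d0: list[Example] = []  # uncertain: prediction does not match the label
    d1: list[Example] = []  # certain: prediction matches the label
    for ex in data:
        # Case-insensitive exact match is a simplifying assumption.
        if predict(ex.question).strip().lower() == ex.label.strip().lower():
            d1.append(ex)
        else:
            d0.append(ex)
    return d0, d1

def build_refusal_aware(
    d0: list[Example], d1: list[Example]
) -> list[dict[str, str]]:
    """Step 2: append a certainty (D1) or uncertainty (D0) expression after the label."""
    records = []
    for subset, expression in ((d1, "I am sure."), (d0, "I am unsure.")):
        for ex in subset:
            records.append({
                "prompt": f"Q: {ex.question}\nA:",
                "target": f" {ex.label}. {CERTAINTY_PROMPT} {expression}",
            })
    return records

# Toy usage: a lookup table stands in for the model's predictions.
fake_answers = {
    "What is the capital of France?": "Paris",
    "Who wrote Hamlet?": "Marlowe",  # wrong on purpose, so it lands in D0
}
data = [
    Example("What is the capital of France?", "Paris"),
    Example("Who wrote Hamlet?", "Shakespeare"),
]
d0, d1 = split_by_knowledge(data, lambda q: fake_answers.get(q, ""))
dataset = build_refusal_aware(d0, d1)
for record in dataset:
    print(record["target"])
```

In this sketch, D0 examples keep their original labels; only the appended expression differs, so the fine-tuning target still contains the answer while also flagging it as uncertain.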

R-Tuning’s effectiveness is demonstrated through both single-task and multi-task experiments across seven datasets. In single-task settings, the fine-tuned model is better at refusing uncertain questions and more accurate on questions within its knowledge boundary. In multi-task settings, R-Tuning not only outperforms the baselines on in-domain datasets but also generalizes better to out-of-domain datasets.
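One simple way to quantify the trade-off described above is to track how often the model answers and how accurate it is on the questions it does answer. The helper below is a hypothetical sketch that assumes each evaluation question has already been marked as refused or not and, if answered, as correct or not; the metrics reported in the R-Tuning paper may be defined differently.

```python
from dataclasses import dataclass

@dataclass
class Outcome:
    refused: bool  # model declined or expressed uncertainty
    correct: bool  # answer matched the label (ignored when refused)

def refusal_metrics(outcomes: list[Outcome]) -> dict[str, float]:
    """Hypothetical metrics: share of questions answered, and accuracy on those."""
    answered = [o for o in outcomes if not o.refused]
    answer_rate = len(answered) / len(outcomes) if outcomes else 0.0
    accuracy_on_answered = (
        sum(o.correct for o in answered) / len(answered) if answered else 0.0
    )
    return {"answer_rate": answer_rate, "accuracy_on_answered": accuracy_on_answered}

# Toy example: 3 of 4 questions answered, 2 of those 3 correct.
outcomes = [
    Outcome(refused=False, correct=True),
    Outcome(refused=False, correct=True),
    Outcome(refused=False, correct=False),
    Outcome(refused=True, correct=False),
]
print(refusal_metrics(outcomes))
```

A model that refuses more often can raise its accuracy on answered questions at the cost of a lower answer rate, so the two numbers are best read together.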

The most intriguing finding is that the model’s refusal ability behaves like a meta-skill: a skill that transcends specific tasks and can be strengthened through multi-task training. By incorporating uncertainty learning into the training process, R-Tuning both refines the model’s uncertainty estimates and improves its overall performance in answering questions.
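To turn the learned certainty expression into a numeric uncertainty estimate, one option is to compare the scores the model assigns to "sure" and "unsure" after the certainty question and normalize them into a probability. The sketch below assumes you already have those two logits from your model; the function names, the 0.5 threshold and the refusal message are hypothetical.

```python
import math

# Placeholder refusal message (hypothetical).
REFUSAL = "I am unsure."

def sure_confidence(logit_sure: float, logit_unsure: float) -> float:
    """Normalize the 'sure' score against 'unsure' with a two-way softmax."""
    # Subtract the larger logit before exponentiating for numerical stability.
    m = max(logit_sure, logit_unsure)
    e_sure = math.exp(logit_sure - m)
    e_unsure = math.exp(logit_unsure - m)
    return e_sure / (e_sure + e_unsure)

def answer_or_refuse(answer: str, logit_sure: float, logit_unsure: float,
                     threshold: float = 0.5) -> str:
    """Return the answer if confidence clears the (hypothetical) threshold, else refuse."""
    if sure_confidence(logit_sure, logit_unsure) >= threshold:
        return answer
    return REFUSAL

print(round(sure_confidence(2.0, 0.0), 3))      # 0.881
print(answer_or_refuse("Paris", 3.0, 0.0))      # Paris
print(answer_or_refuse("Paris", 0.0, 3.0))      # I am unsure.
```

Raising the threshold makes the model refuse more often; where to set it depends on how costly a wrong answer is compared with a refusal.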

Refusal-Aware Instruction Tuning is a promising approach to the hallucination problem in large language models. By teaching models to recognize their knowledge boundaries and refuse questions they cannot answer, R-Tuning paves the way for more reliable and accurate language models. As language models continue to evolve, R-Tuning stands out as an important step toward making these powerful tools more trustworthy.